Solution

A Full-stack Computational Sensing Platform

Perceptive solves sensing with a computational sensing platform designed and built like a computer stack — the quintessential scalable machine. This approach redefines the architecture and roles of sensing hardware, compute, and software.

- Cloud: PerceptiveCloud

- Apps: Perceptive Sensing

- Abstraction Layer: PerceptiveOS

- Hardware: Perceptive Universal Sensor

Perceptive’s Universal Sensing Hardware subsystem serves as the foundation, measuring high-fidelity signals from the environment and collecting ultra-raw data — with processing taking place higher in the stack. This architecture is fundamentally different from today’s sensing systems that silo data from sensors and process it on the spot, essentially discarding 99% of the information received.

The Hardware Abstraction Layer (HAL) sits on top of the hardware subsystem to derive abstract features from raw signals and cast them into a unified, multi-dimensional virtual space. This creates a universal, highly abstract representation of signals that provides a common sensory language to record insights about the environment.

The HAL provides the PerceptiveOS operating system and APIs that support specialized Software-defined Sensing Applications such as radar apps, camera apps, lidar apps, motion apps, or any hybrid variety.
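As a rough illustration of the abstraction-layer idea, the sketch below shows how readings from different modalities could be cast into one shared feature list that any app can consume. It is not Perceptive's implementation or API: the names `Feature`, `SensorAdapter`, `RadarLikeAdapter`, `LidarLikeAdapter`, `unify`, and `nearest_range_m`, and the raw-reading fields, are all assumptions made for this example.

```python
import math
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    """One abstract feature in a shared, vehicle-centred frame (hypothetical schema)."""
    x_m: float
    y_m: float
    kind: str      # e.g. "echo_power" or "reflectivity"
    value: float
    source: str    # modality that produced the feature


class SensorAdapter(ABC):
    """Hypothetical HAL adapter: one subclass per sensing modality."""

    @abstractmethod
    def to_features(self, raw: dict) -> list[Feature]:
        """Convert one raw reading into features in the shared frame."""


def polar_to_xy(range_m: float, azimuth_deg: float) -> tuple[float, float]:
    """Convert a range/azimuth pair to Cartesian coordinates in metres."""
    az = math.radians(azimuth_deg)
    return range_m * math.cos(az), range_m * math.sin(az)


class RadarLikeAdapter(SensorAdapter):
    def to_features(self, raw: dict) -> list[Feature]:
        x, y = polar_to_xy(raw["range_m"], raw["azimuth_deg"])
        return [Feature(x, y, "echo_power", raw["power"], "radar")]


class LidarLikeAdapter(SensorAdapter):
    def to_features(self, raw: dict) -> list[Feature]:
        x, y = polar_to_xy(raw["range_m"], raw["azimuth_deg"])
        return [Feature(x, y, "reflectivity", raw["reflectivity"], "lidar")]


def unify(readings: list[tuple[SensorAdapter, dict]]) -> list[Feature]:
    """Cast readings from any mix of modalities into one flat feature list."""
    features: list[Feature] = []
    for adapter, raw in readings:
        features.extend(adapter.to_features(raw))
    return features


def nearest_range_m(features: list[Feature]) -> float:
    """A toy 'sensing app': distance to the closest feature, whatever its source."""
    return min(math.hypot(f.x_m, f.y_m) for f in features)


features = unify([
    (RadarLikeAdapter(), {"range_m": 40.0, "azimuth_deg": 0.0, "power": 0.8}),
    (LidarLikeAdapter(), {"range_m": 12.0, "azimuth_deg": 90.0, "reflectivity": 0.3}),
])
print(len(features), round(nearest_range_m(features), 3))
```

The point of the pattern is that `nearest_range_m` never needs to know which sensor produced a feature; adding a modality means adding one adapter, not changing the apps.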

Remotely, the Perceptive Cloud software ecosystem provides a development environment where users can write, simulate, compile, and deploy sensing software in a virtual, physically accurate environment.

The Perceptive Platform revolutionizes how autonomous intelligence senses and makes sense of the world, and will redefine the way autonomous vehicles are conceived and built. More than a solution for autonomy, it’s a platform for generating solutions that will continue to redefine the future of autonomy.

A New Kind of Architecture

The Perceptive solution is distributed across three main subsystems.

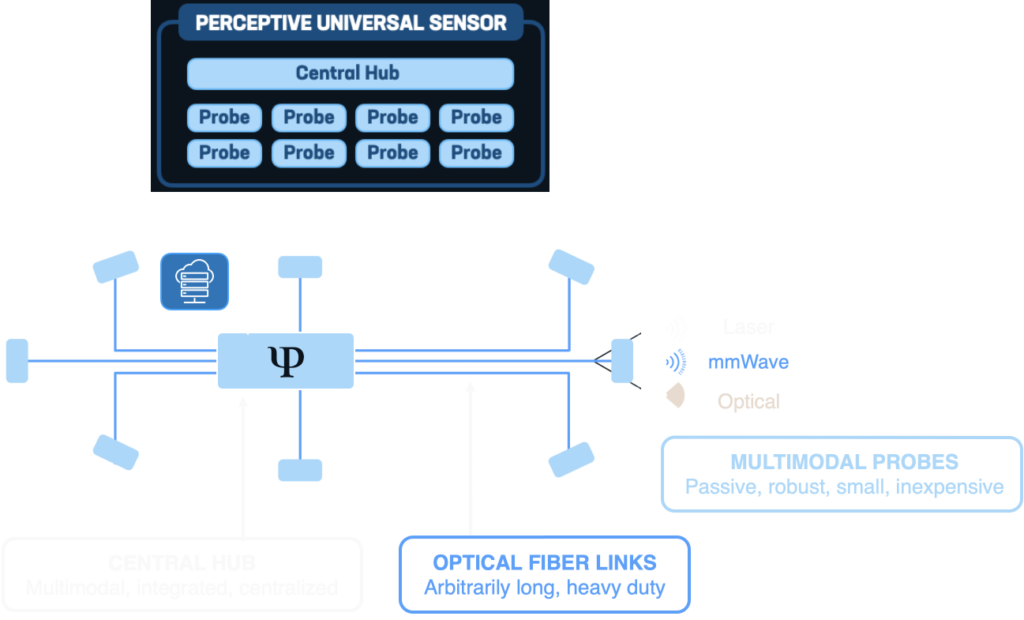

1. Universal Sensor

This full-vehicle sensor system includes a central hub hosted inside the vehicle’s cabin along with small, multimodal probes distributed along the vehicle’s perimeter. The probes convey signals to and from the environment through optical fibers; the central hub measures these signals and transmits the measurements to a detached sensing computer. From there, a downlink lets the downstream software stack reconfigure the sensor hardware on the fly for active, adaptive sensing. Third-party sensors are not required, but they may also connect to the sensing computer.

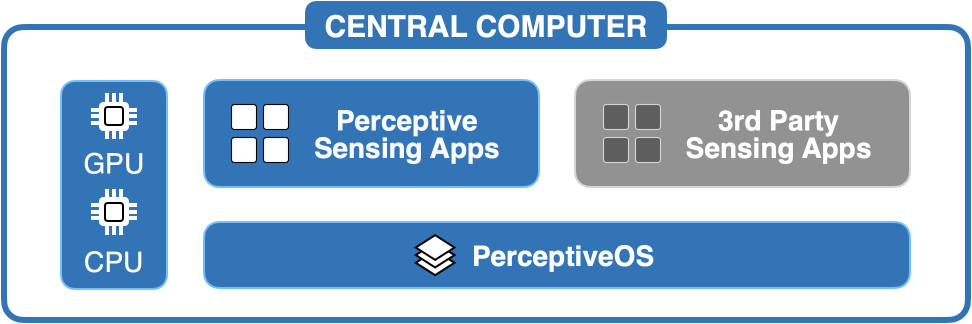

2. Sensing Computer

Three main software components run on the sensing computer: (a) PerceptiveOS, a sensor hardware abstraction layer (HAL); (b) Perceptive’s Concurrent Sensing apps (and, optionally, third-party specialized sensing apps); and (c) an API. PerceptiveOS gathers raw signals from sensors and maps them to a universal, raw-feature-based, probabilistic representation of the world. From this multimodal representation, Concurrent Sensing’s AI produces a single, global, multidimensional point cloud (the Hyper Pointcloud) in which each point is a long vector capturing multiple aspects of the object’s physics (e.g., surface properties, material, orientation, velocity, spin, and albedo). Finally, the API provides an I/O interface between PerceptiveOS, the sensing apps, and the client’s computer.
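To make the "each point is a long vector" idea concrete, here is a minimal sketch of what one point in such a cloud might look like to a client. The field layout, units, and the names `HyperPoint`, `as_vector`, and `moving_points` are assumptions for illustration only; the actual Hyper Pointcloud format is not described here.

```python
import math
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class HyperPoint:
    """One point of a Hyper Pointcloud-style cloud (hypothetical field layout)."""
    x_m: float
    y_m: float
    z_m: float
    vx_mps: float
    vy_mps: float
    vz_mps: float
    spin_radps: float
    albedo: float
    material_id: int  # index into an assumed material table

    def as_vector(self) -> list[float]:
        """Flatten the point into a single numeric vector."""
        return [float(v) for v in astuple(self)]

    @property
    def speed_mps(self) -> float:
        """Magnitude of the point's velocity."""
        return math.sqrt(self.vx_mps**2 + self.vy_mps**2 + self.vz_mps**2)


def moving_points(cloud: list[HyperPoint], min_speed_mps: float) -> list[HyperPoint]:
    """Select points whose speed is at least min_speed_mps."""
    return [p for p in cloud if p.speed_mps >= min_speed_mps]


cloud = [
    HyperPoint(10.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.15, 1),
    HyperPoint(25.0, -3.0, 0.8, 3.0, 4.0, 0.0, 0.2, 0.60, 2),
]
movers = moving_points(cloud, min_speed_mps=1.0)
print(len(cloud[0].as_vector()), [p.material_id for p in movers])
```

Because velocity and material travel with every point, a query such as "which points are moving" becomes a simple filter rather than a cross-sensor fusion step.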

3. Cloud

A complete digital twin of Perceptive’s Universal Sensor and sensing software is hosted in the cloud, along with our AlphaSensing™ software. AlphaSensing™ can either learn user-provided digital signal processing software or derive its own without user intervention, and can then compile and deploy the software to the Universal Sensor and Sensing Computer.

Uncompromising Capabilities

Perceptive’s novel architecture redefines how autonomous intelligence solutions can scale, evolve, and drive the continuous improvement of autonomous vehicles. It dramatically expands both the software and hardware design space to include capabilities, form factors, economic models, and a universe of possibilities that were previously unthinkable. It’s more than a solution — it’s a generator of solutions.

Perceptive drives unparalleled improvements across the entire sensing system.

- Performance

- Scalability

- Development

- Integration

- Openness

Perceptive’s unique insights into an object’s physics dramatically enhance the system’s ability to identify, track, and predict objects in the environment. Vehicles can see farther (>500 meters) with an unmatched level of detail (0.001°), even in adverse weather or under other impairments.
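To put the two figures together, the short calculation below converts an angular step into the spacing between adjacent samples at a given range, using the small-angle relation spacing ≈ range × angle (in radians). It assumes the 0.001° figure is an angular resolution; the function name `lateral_spacing_m` is made up for this example.

```python
import math


def lateral_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Arc length subtended by one angular step at the given range."""
    return range_m * math.radians(angular_resolution_deg)


spacing = lateral_spacing_m(500.0, 0.001)
print(f"{spacing * 1000:.1f} mm")  # prints "8.7 mm"
```

Under that assumption, adjacent samples at 500 meters would be just under a centimeter apart.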

With Perceptive, entirely new sensing and perception strategies can be drafted, coded, and virtually verified in the cloud with standard software tools (e.g., C++, Python, and PyTorch) and a fraction of the engineering workforce required by today’s hardware-centric sensing stacks. This allows for rapid trial and error, which is critical for moving beyond today’s incremental progress.

Perceptive’s platform is radically simpler to integrate than today’s tangle of third-party devices. It provides an end-to-end solution with a single electrical access point, mechanical interface, data format, and API. Thanks to digital multiplexing, it’s built with only a fraction of the hardware components used in today’s systems and, because of that, has fewer points of failure, is more resilient to extreme environmental conditions, and is more respectful of the vehicle’s aesthetics.

Safety is a critical aspect of autonomous vehicles. An opaque sensing system made of third-party black-box solutions would be a liability for any AV developer. Because transparency, verifiability, software control, and data ownership are essential for any integrator ultimately responsible for passenger safety, Perceptive provides full access and control over hardware, software, and raw data at all stages: Universal Sensor, Sensing Computer, and Cloud.